ONNX: Making Machine Learning models platform and language-independent

It is well known that the world of machine learning and artificial intelligence is constantly evolving. Typically, software developers and data scientists specialize in a single machine learning framework, thus becoming experts in the library of their preferred language. Unfortunately, this can be a limitation: one might face a significant obstacle whenever working with different technologies.

In this article, we will explore how ONNX is the key to breaking free from the reliance on different machine learning frameworks.

The Problem

Being an expert in all the frameworks and programming languages used for developing machine learning models is a real challenge today.

What happens when you need to work with a framework you’re not familiar with, or when a client requests a specific language? ONNX provides the solution to this problem.

What is ONNX and how it solves the problem

ONNX, short for Open Neural Network Exchange, is a platform and programming language-independent machine learning model format. For this reason, it is possible to develop a model in the preferred framework and then export it in this format.

If a client requests the use of a specific framework different from the one used to develop a model, thanks to ONNX, there is no problem. After exporting the original version of a model in this format, it can be imported in the same format into the required framework and use the appropriate ONNX runtime for inference.

ONNX allows you to easily adapt models to various languages without the need to be an expert in each of them.

Features of ONNX

This format is characterized by a series of features:

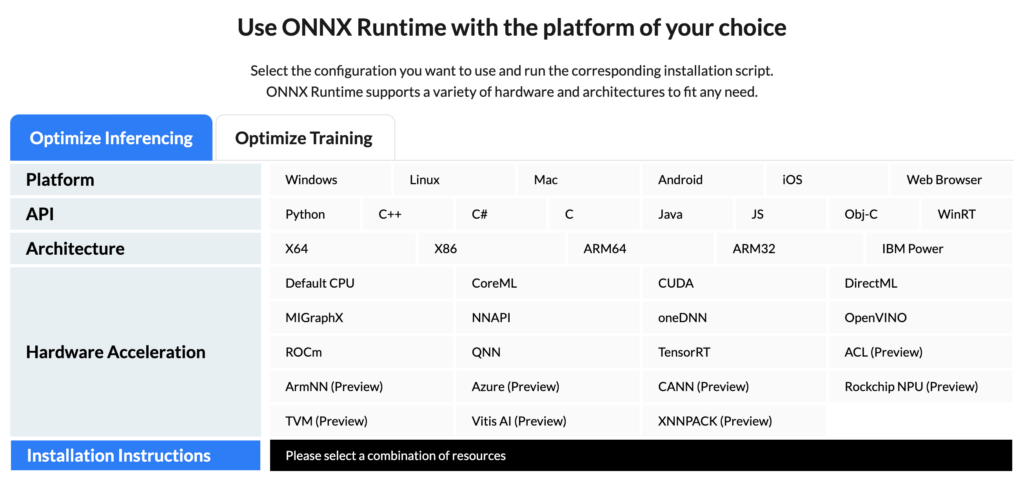

- Runtime: ONNX supports a wide range of runtimes for different platforms and programming languages. To use a model in a specific framework, it is enough to install the appropriate runtime.

- Opset: The version number of the opset indicates the mathematical functions supported by ONNX that a neural network can execute. Before exporting a model, it’s important to ensure that the opset is compatible with the functions used in the model. It’s crucial to consider the opset when working with models from this framework, as compatibility between different versions of the opset and ONNX runtime libraries may vary, affecting the portability of models across different platforms and languages.

- Training: You can train a model directly in ONNX.

- Optimization: ONNX offers a series of optimizations to enhance the execution performance of models, including parallelization of calculations, reduced precision inference, and optimization of underlying mathematical libraries. This makes it a popular choice for efficient execution of machine learning models in real-time applications or on devices with limited resources.

- Inference: Inference with machine learning models can be performed directly in the chosen ONNX runtime.

Example of ONNX Usage

To make the potential of the format even clearer, let’s consider a plausible use case.

A client requests the implementation of a machine learning model capable of correctly classifying whether an image is real or AI-generated.

In this scenario, it is assumed that the framework you are most experienced with is PyTorch in Python, but the client in question wants the model to be able to run in the Web browser using JavaScript.

Starting with the implementation of a model written in PyTorch, it can then be exported to ONNX through a short piece of code. Here’s an example:

import torch

from classes.Classifier import Classifier

model = Classifier.load_from_checkpoint("model.ckpt")

model.eval()

x = torch.randn(1, 3, 224, 224, requires_grad=True)

torch.onnx.export(model.model, # model being run

x, # model input (or a tuple for multiple inputs)

"model.onnx", # where to save the model (can be a file or file-like object)

export_params=True, # store the trained parameter weights inside the model file

opset_version=10, # the ONNX version to export the model to

do_constant_folding=True, # whether to execute constant folding for optimization

input_names=['input'], # the model's input names

output_names=['output']) # the model's output names

At this point, moving on to JavaScript, after installing the ONNX Runtime Web, you can import the model in ONNX format and run inference directly in the browser.

Below is a simple code example:

import * as ort from "onnxruntime-web";

// Load the model and create InferenceSession

const modelPath = "path/to/your/onnx/model";

const session = await ort.InferenceSession.create(modelPath);

// Load and preprocess the input image to inputTensor

...

// Run inference

const outputs = await session.run({ input: inputTensor });

console.log(outputs);

Conclusion

The example just described demonstrates how it is possible to develop a model in PyTorch, export it to ONNX format, and use it in JavaScript for inference, enabling effortless interoperability between different frameworks and programming languages.

It can be stated that the ONNX format has effectively become a standard. In fact, it has gained a crucial role in the world of machine learning, offering the possibility to work more flexibly, adapt to client needs, and break free from dependency on a single framework or language.