Real-Time Knowledge Injection: Enhancing Chatbot Intelligence

Most chatbots use short-term memory, which limits their ability to store information and build autonomous knowledge.

In this article, originally published (in Italian) on MokaByte, Luca Giudici presents a case study from the Memoria Business Unit, showing how his team implemented real-time knowledge injection: first by developing a RAG system, and later by evolving it into an agent-based architecture with a dynamic knowledge base.

Chatbots and the current memory challenges

In recent years, both companies and users have embraced chatbots and virtual assistants as key tools for customer support and business process automation.

Thanks to their ability to interpret natural language, users often perceive these tools as “intelligent” and believe they can remember and reuse information shared during conversations.

In reality, most chatbots retain information only for the duration of a single session: they forget everything once the conversation ends and do not update their knowledge in real time.

Limits of short-term memory

This limitation is more than a technical detail — it’s a real challenge for companies that rely on chatbots for customer support.

I experienced this firsthand during a project for Memoria, where we developed a system based on a Retrieval-Augmented Generation (RAG) architecture to retrieve answers from industrial machinery technical manuals.

During testing, the client noticed an incorrect response caused by outdated documentation. After correcting the chatbot directly in the chat, they expected the assistant to automatically provide the updated answer in the next interaction.

The absence of persistent memory revealed a critical need: enabling the assistant to update its knowledge in real time, without waiting for the next knowledge base refresh cycle.

Integrating permanent memory into an AI assistant overcomes these limitations, enhancing accuracy, efficiency, and reliability of responses — and delivering a truly intelligent, dynamic user experience.

The RAG-Based Memoria solution

Our project is built on a RAG (Retrieval-Augmented Generation) architecture, which combines information retrieval with natural language generation powered by an LLM (Large Language Model).

We started by creating a structured knowledge base from technical manuals, processing the documentation and dividing it into text fragments. Each fragment is then converted into a numerical vector known as an embedding.
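As a rough illustration of the fragmentation step, the sketch below splits a text into overlapping word windows. The function name `split_into_fragments` and the window sizes are assumptions made for this example; the article does not say which splitting strategy the project actually used.

```python
def split_into_fragments(text: str, max_words: int = 50, overlap: int = 10) -> list[str]:
    """Split text into overlapping word windows (sizes are illustrative)."""
    if overlap >= max_words:
        raise ValueError("overlap must be smaller than max_words")
    words = text.split()
    step = max_words - overlap
    fragments = []
    for start in range(0, len(words), step):
        fragments.append(" ".join(words[start:start + max_words]))
        # Stop once the current window already reaches the end of the text.
        if start + max_words >= len(words):
            break
    return fragments
```

The overlap keeps a sentence that straddles a boundary visible in both neighbouring fragments, which is one common way to avoid losing context at the cut points.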

These embeddings are represented in a vector space, a mathematical environment where the position of each vector reflects the semantic meaning of the original text. Texts with similar content are located close together, while those with different meanings are farther apart.
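To make the idea of "closeness" concrete, here is a small sketch that uses cosine similarity, a common way to compare embeddings. The three-dimensional vectors are hand-made stand-ins, not output from a real embedding model, and the article does not state which distance measure the project uses.

```python
import math

def cosine_similarity(a: list[float], b: list[float]) -> float:
    """Return the cosine of the angle between two vectors (1.0 = same direction)."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0
    return dot / (norm_a * norm_b)

# Hand-made vectors standing in for real embeddings (assumption for illustration).
pump_maintenance = [0.9, 0.1, 0.0]
pump_servicing = [0.8, 0.2, 0.1]
invoice_policy = [0.0, 0.1, 0.9]

related = cosine_similarity(pump_maintenance, pump_servicing)
unrelated = cosine_similarity(pump_maintenance, invoice_policy)
```

With these toy values, `related` is much higher than `unrelated`, which mirrors how texts with similar content end up close together in the vector space.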

This mechanism powers our semantic engine, which delivers accurate and contextual responses based on the knowledge base.

Architecture and implementation

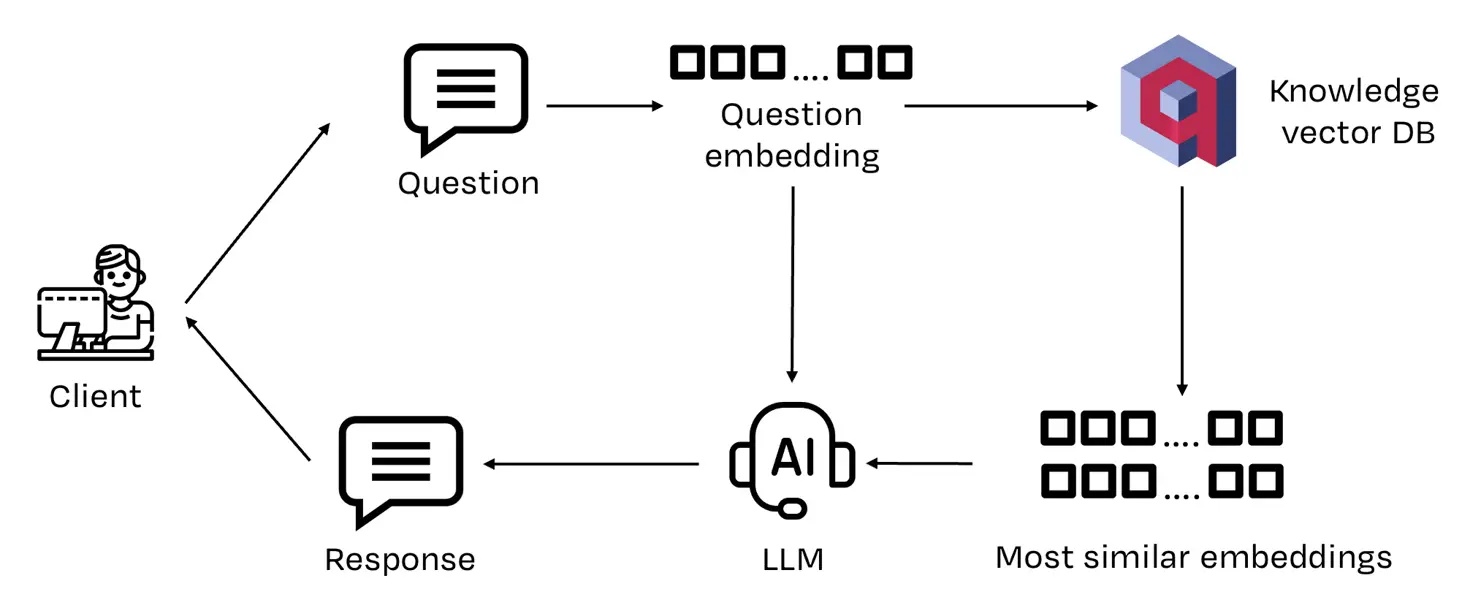

The first version of our solution had the following architecture.

As shown in the picture above, this first RAG architecture processes user queries through four main stages:

- Query reception: the chatbot receives the user’s question and prepares it for semantic processing.

- Semantic retrieval: the chatbot converts the query into a vector embedding and compares it within the semantic space to identify the most relevant text fragments, selecting the document sections that best match the request.

- Response generation: the language model takes the retrieved fragments and generates a coherent and accurate answer using the available context.

- Result delivery: the chatbot sends the final response back to the user, completing the interaction flow.
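The four stages above can be sketched in a few lines. Everything here is a simplified assumption: `embed` uses word counts instead of a real embedding model, and `generate` only assembles a prompt where a real system would call the LLM.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding (word counts); a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def retrieve(query: str, fragments: list[str], top_k: int = 2) -> list[str]:
    """Return the top_k fragments most similar to the query."""
    query_vector = embed(query)
    ranked = sorted(fragments, key=lambda f: similarity(query_vector, embed(f)), reverse=True)
    return ranked[:top_k]

def generate(query: str, context: list[str]) -> str:
    """Stand-in for the LLM call: it only builds the prompt that would be sent."""
    joined = "\n".join(f"- {c}" for c in context)
    return f"Answer using only this context:\n{joined}\nQuestion: {query}"

def answer(query: str, fragments: list[str]) -> str:
    query = query.strip()                 # 1. query reception
    context = retrieve(query, fragments)  # 2. semantic retrieval
    response = generate(query, context)   # 3. response generation
    return response                       # 4. result delivery
```

In a production setup the fragment embeddings would be computed once and stored in a vector database rather than recomputed on every query, as this sketch does for brevity.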

From classic RAG to an agent system

To update the chatbot’s knowledge in real time, we transformed the classic RAG architecture into a system of intelligent agents that are equipped with tools and can decide independently how to interact with the knowledge base.

In this context, a tool acts as a resource that the agent uses to expand its capabilities or perform specific operations beyond its native functions.

We have developed two main tools: one for updating the knowledge base and one for managing the conversation, both of which are essential for providing answers that are always up to date and relevant.

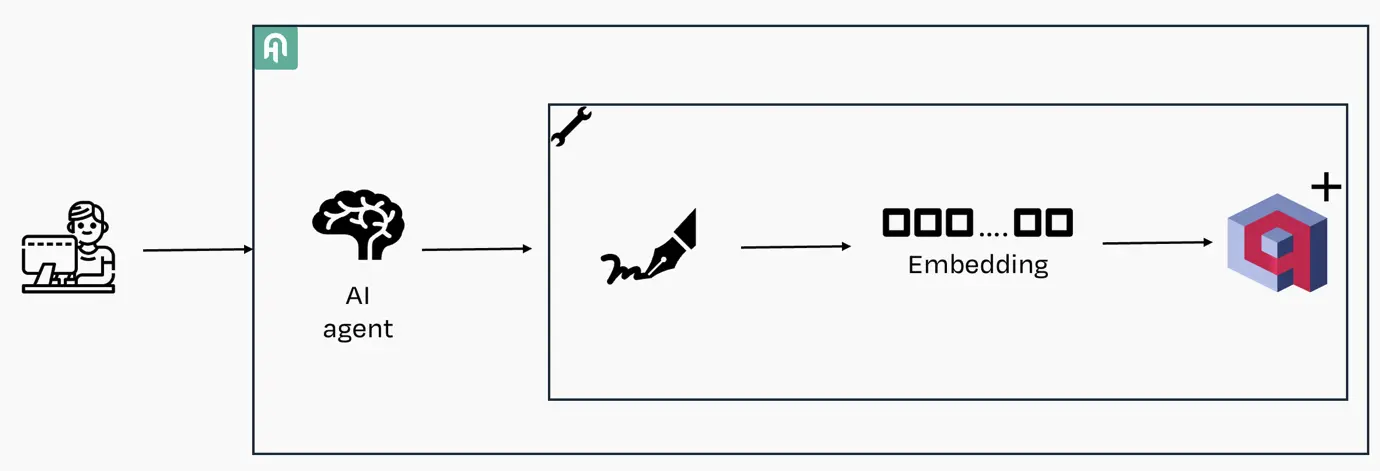

Knowledge base update tool

This tool kicks in when the user adds new information or corrects existing data. It generates the embedding of the updated text and saves it in a dedicated vector database, which holds the chatbot’s new knowledge in real time and keeps the data consistent and easy to retrieve.
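A minimal sketch of such an update tool might look like the following. The `UpdateStore` class is an in-memory stand-in for the dedicated vector database, and both it and `update_knowledge_tool` are hypothetical names; the toy `embed` function replaces a real embedding model.

```python
import re
import time
from collections import Counter
from dataclasses import dataclass, field

def embed(text: str) -> Counter:
    """Toy embedding (word counts); a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

@dataclass
class UpdateStore:
    """In-memory stand-in for the dedicated vector database of live updates."""
    records: list = field(default_factory=list)

    def add(self, text: str) -> dict:
        record = {"text": text, "vector": embed(text), "created_at": time.time()}
        self.records.append(record)
        return record

def update_knowledge_tool(store: UpdateStore, user_message: str) -> str:
    """Tool entry point: persist a correction so later queries can retrieve it."""
    cleaned = user_message.strip()
    if not cleaned:
        return "Nothing to store."
    store.add(cleaned)
    return f"Stored update #{len(store.records)}."
```

Storing a timestamp with each record is an extra assumption here; it would make it possible to prefer the most recent correction when several updates touch the same topic.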

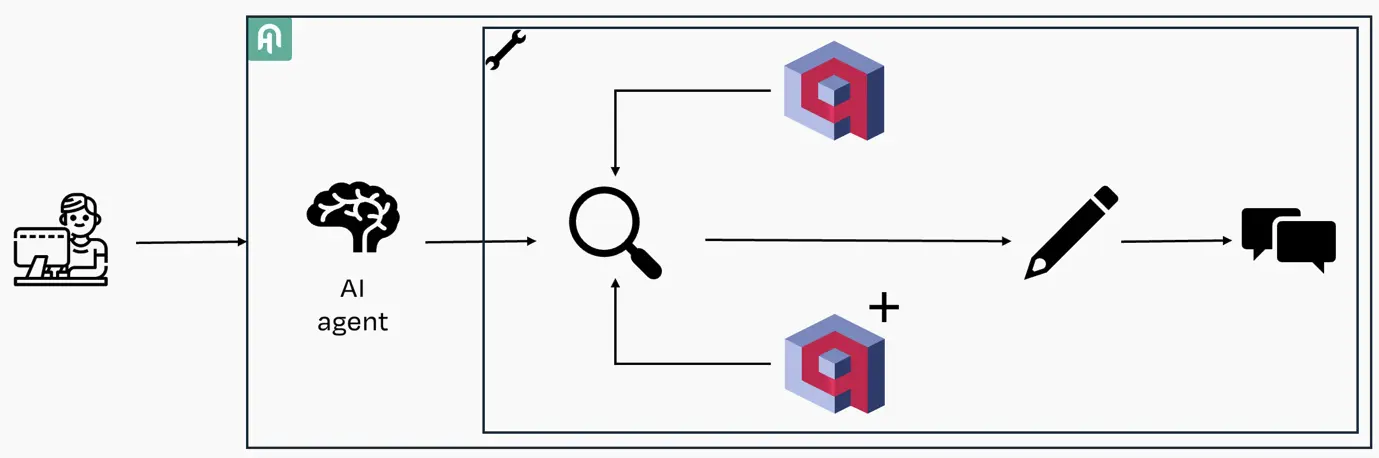

Conversation management tool

This tool kicks in every time the user asks a question. It queries both the original knowledge base and the real-time updated knowledge base, retrieves the most relevant text fragments, and sends them to the language model, which generates an accurate and contextual final response.
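The dual lookup can be sketched as below. The two knowledge bases are plain lists of strings, the `embed` function is a toy word-count embedding, and `conversation_tool` returns the prompt instead of calling an LLM; all of these are assumptions made to keep the example self-contained.

```python
import math
import re
from collections import Counter

def embed(text: str) -> Counter:
    """Toy embedding (word counts); a real system would call an embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))

def similarity(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

def search(query: str, fragments: list[str], source: str, top_k: int) -> list[dict]:
    """Score every fragment of one knowledge base and tag hits with their source."""
    query_vector = embed(query)
    scored = [
        {"text": f, "source": source, "score": similarity(query_vector, embed(f))}
        for f in fragments
    ]
    return sorted(scored, key=lambda hit: hit["score"], reverse=True)[:top_k]

def conversation_tool(query: str, original_kb: list[str], realtime_kb: list[str], top_k: int = 2) -> str:
    """Query both knowledge bases and build the prompt that would go to the LLM."""
    hits = search(query, original_kb, "original", top_k) + search(query, realtime_kb, "realtime", top_k)
    context = "\n".join(f"[{hit['source']}] {hit['text']}" for hit in hits)
    return f"Context:\n{context}\n\nQuestion: {query}"
```

Tagging each fragment with its source keeps the origin visible to later steps, which matters when the two knowledge bases disagree.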

Final solution architecture

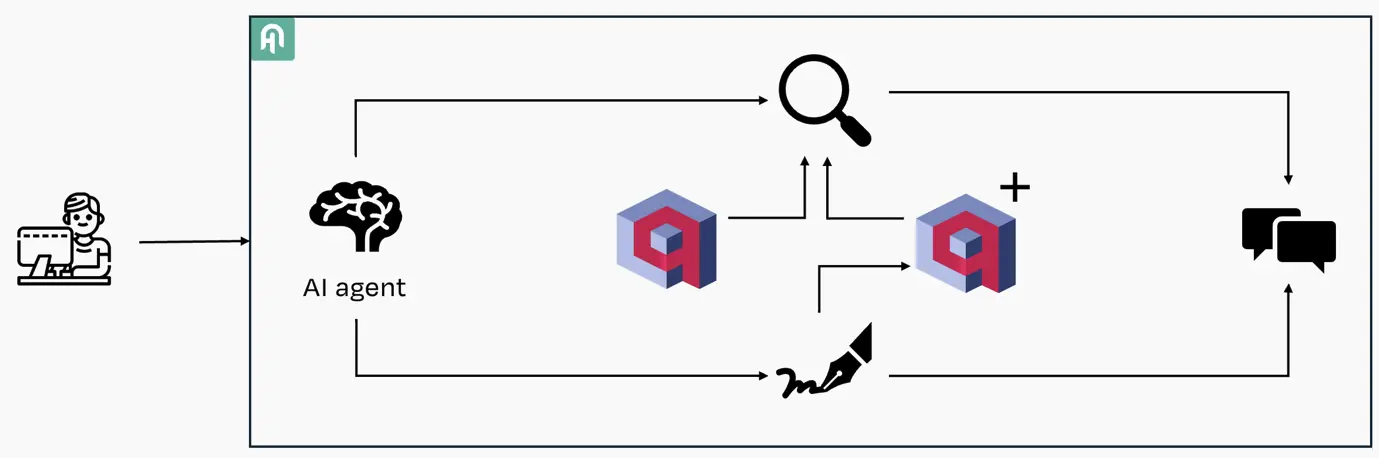

By integrating the two tools described above, the final architecture is as shown in the following picture.

The processing procedure

The illustration above shows how an advanced chatbot based on an agent with knowledge injection works. The process for handling user queries involves four main stages:

- Query reception: the user submits a question, which is captured by the chatbot for processing.

- Selection of the appropriate tool: the agent analyzes the semantic meaning of the query to determine whether it involves a knowledge update or a simple information request. In case of an update, the message is directed to the knowledge base update tool; for informational queries, the conversation management tool is used.

- Result merging: when the conversation tool is employed, the agent merges data from two distinct knowledge bases, ensuring that the response is always consistent and up to date.

- Response delivery: the collected information is processed by an LLM (Large Language Model) to generate an accurate and contextualized answer.
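The tool-selection stage can be sketched as a small dispatcher. In the architecture described here the agent decides from the semantic meaning of the message, typically by asking the LLM; the keyword check in `classify_intent` below is only a stand-in so that the example runs without a model, and the cue list is invented for illustration.

```python
from typing import Callable

# Hypothetical cue phrases; a real agent would let the LLM decide instead.
UPDATE_CUES = ("correction", "actually", "update", "is now", "has changed", "remember that")

def classify_intent(message: str) -> str:
    """Stand-in for the agent's semantic decision: 'update' or 'question'."""
    lowered = message.lower()
    return "update" if any(cue in lowered for cue in UPDATE_CUES) else "question"

def route(message: str, tools: dict[str, Callable[[str], str]]) -> str:
    """Send the message to the tool that matches the detected intent."""
    intent = classify_intent(message)
    return tools[intent](message)
```

A usage example would register the two tools under the keys `"update"` and `"question"` and pass every incoming message through `route`.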

The use of two separate knowledge bases introduces the challenge of managing contradictions: if a piece of information exists in both bases but with different content, the system must determine which version is trustworthy. To address this issue, priority is given to the knowledge base with real-time updates, ensuring that responses are always based on the most recent data while ignoring outdated versions.
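One possible reading of this priority rule is sketched below: fragments from the real-time knowledge base are listed first, and the prompt tells the model to trust earlier entries when fragments disagree. The hit format (`text`, `source`, `score`) and the function names are assumptions for this example; the article does not describe how the priority is implemented.

```python
def prioritize(hits: list[dict]) -> list[dict]:
    """Order hits so real-time updates come first, then by descending score."""
    return sorted(hits, key=lambda hit: (hit["source"] != "realtime", -hit["score"]))

def build_context(hits: list[dict]) -> str:
    """Build a numbered context block that makes the priority explicit to the LLM."""
    lines = ["If two fragments disagree, trust the one listed first."]
    for position, hit in enumerate(prioritize(hits), start=1):
        lines.append(f"{position}. [{hit['source']}] {hit['text']}")
    return "\n".join(lines)
```

A stricter variant could drop the conflicting original fragment altogether, but detecting that two fragments contradict each other is itself a non-trivial step that this sketch does not attempt.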

Separating the knowledge bases has two main advantages:

- Simplified update management: insertions, corrections, and deletions become faster and safer without compromising the original knowledge base.

- Optimized vector database performance: updating the main knowledge base directly would require re-indexing all vectors, impacting performance. By keeping the two spaces separate, the system maintains high search speed even with frequent updates.

This strategy makes it possible to balance response accuracy, system maintainability, and search performance, making the chatbot more reliable, responsive, and capable of providing up-to-date answers to user queries.

Conclusions

The evolution from traditional RAG systems to those based on agents with dynamic knowledge bases eliminates one of the main limitations of chatbots: the inability to update information in real time.

This approach enhances response accuracy, improves user satisfaction, and reduces the time required to keep the knowledge base aligned with customer operational needs.

Looking ahead, the next step is to automate documentation updates. Once validated and consolidated, the information collected in real time within the knowledge base would feed the creation of new versions of user manuals and operational guides, keeping them up to date without complex manual processes.

In this way, the chatbot with knowledge injection not only supports users but also becomes an active engine for the continuous improvement of corporate technical documentation, optimizing the efficiency and reliability of information.